How-to: Configure Linux to support WebLogic usage

Overview

This example illustrates how the SSH plugin can be used to configure Linux (specifically the CentOS and Red Hat distributions) to support WebLogic usage.

Three steps are required to use the plugin:

- Define resources to enable usage of the plugin.

- Define credentials for authentication.

- Define the module.

Finally, Pineapple is invoked to execute the module, which configures the OS on the targeted resources.

For information about how to define resources, credentials and modules, refer to the plugin usage page.

Part of the default configuration

This example, named ssh-006-configure-os-weblogic-linux64, is part of the default configuration created by Pineapple, including all of its configuration files, so there is no need to create it by hand.

Define resources

A resource defines an entity in an IT environment which is manageable by Pineapple through some protocol. In this example, the entity is a Linux OS and the protocol is SSH. One resource is defined for each Linux host whose OS should be configured.

In this example we will use the linux-vagrant environment defined as part of the default configuration. The environment defines a network with three hosts:

- Node1 with IP Address: 192.168.34.10

- Node2 with IP Address: 192.168.34.11

- Node3 with IP Address: 192.168.34.12

To enable SSH usage for these hosts, three resources are defined within the linux-vagrant environment in the resources file located at ${user.home}/.pineapple/conf/resources.xml:

    <?xml version="1.0" encoding="UTF-8"?>
    <configuration xmlns="http://pineapple.dev.java.net/ns/environment_1_0"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://pineapple.dev.java.net/ns/environment_1_0
        http://pineapple.dev.java.net/ns/environment_1_0.xsd">
      <environments>
        <environment description="Vagrant multi-machine Linux environment" id="linux-vagrant">
          <resources>
            <resource plugin-id="com.alpha.pineapple.plugin.ssh" credential-id-ref="ssh-node1" id="ssh-node1" >
              <property value="192.168.34.10" key="host"/>
              <property value="22" key="port"/>
              <property value="1000" key="timeout"/>
            </resource>
            <resource plugin-id="com.alpha.pineapple.plugin.ssh" credential-id-ref="ssh-node2" id="ssh-node2" >
              <property value="192.168.34.11" key="host"/>
              <property value="22" key="port"/>
              <property value="1000" key="timeout"/>
            </resource>
            <resource plugin-id="com.alpha.pineapple.plugin.ssh" credential-id-ref="ssh-node3" id="ssh-node3" >
              <property value="192.168.34.12" key="host"/>
              <property value="22" key="port"/>
              <property value="1000" key="timeout"/>
            </resource>
          </resources>
        </environment>
      </environments>
    </configuration>

Each resource is defined with a different ID, credential reference and IP address. All other properties are identical. The plugin-id attribute binds the resource definition, at runtime, to the code which implements the SSH plugin.
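Because the resources differ only in their ID and IP address, the repeated elements can be generated rather than typed by hand. The following is a minimal sketch and not part of Pineapple: the function name resource_xml is hypothetical, and the output still has to be placed inside the resources element of resources.xml.

```shell
#!/bin/bash
# Hypothetical helper (not part of Pineapple): print one SSH resource element.
# $1 = resource ID (also used as the credential reference), $2 = host IP.
resource_xml() {
    local id="$1" host="$2"
    printf '<resource plugin-id="com.alpha.pineapple.plugin.ssh" credential-id-ref="%s" id="%s" >\n' "$id" "$id"
    printf '  <property value="%s" key="host"/>\n' "$host"
    printf '  <property value="22" key="port"/>\n'
    printf '  <property value="1000" key="timeout"/>\n'
    printf '</resource>\n'
}

# Emit the three resources of the linux-vagrant environment.
n=1
for ip in 192.168.34.10 192.168.34.11 192.168.34.12; do
    resource_xml "ssh-node$n" "$ip"
    n=$((n + 1))
done
```

The port and timeout values are copied from the example above; adjust them in the function if the hosts use different settings.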

Define credentials

Since SSH requires authentication, each resource defines a reference to a credential. A credential defines the user name and password used for authentication when an SSH session is created to a host.

Three credentials are created within the linux-vagrant environment to support authentication. The credentials are defined in the credentials file located at ${user.home}/.pineapple/conf/credentials.xml:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <configuration xmlns="http://pineapple.dev.java.net/ns/environment_1_0">
      <environments>
        <environment description="Vagrant multi-machine Linux environment" id="linux-vagrant">
          <credentials>
            <credential password="vagrant" user="vagrant" id="ssh-node1"/>
            <credential password="vagrant" user="vagrant" id="ssh-node2"/>
            <credential password="vagrant" user="vagrant" id="ssh-node3"/>
          </credentials>
        </environment>
      </environments>
    </configuration>
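A resource whose credential-id-ref has no matching credential cannot authenticate, so it can be worth checking the two files against each other. The sketch below is a hypothetical helper, not a Pineapple feature. It uses plain text matching instead of an XML parser and does not distinguish between environments, so it only suits simple files like the ones in this example.

```shell
#!/bin/bash
# Hypothetical consistency check (not part of Pineapple): print every
# credential-id-ref found in a resources file for which the credentials
# file contains no credential element with the same id.
# $1 = resources file, $2 = credentials file.
missing_credentials() {
    local resources="$1" credentials="$2" ref
    grep -o 'credential-id-ref="[^"]*"' "$resources" |
        sed 's/^credential-id-ref="//; s/"$//' |
        sort -u |
        while read -r ref; do
            grep -q "<credential[^>]*[[:space:]]id=\"$ref\"" "$credentials" || echo "$ref"
        done
}

# Example call, assuming the default file locations from this example:
# missing_credentials ~/.pineapple/conf/resources.xml ~/.pineapple/conf/credentials.xml
```

No output means that every reference was resolved.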

Define module

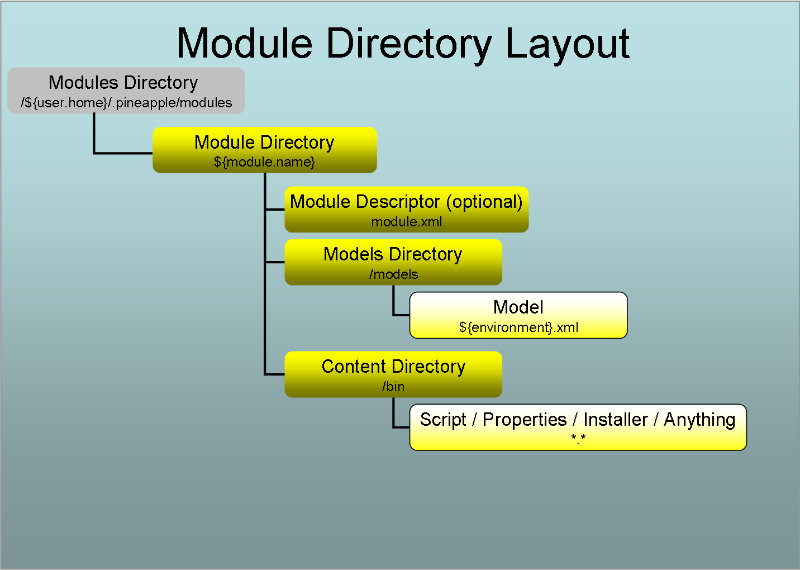

A module defines input to Pineapple and consists, in its minimal form, of a single XML file containing a model. In this example, the module also contains the shell script which is executed on the hosts.

The module is located at ${user.home}/.pineapple/modules/ssh-006-configure-os-weblogic-linux64 and it has the structure:

    ssh-006-configure-os-weblogic-linux64
     |
     +--- models
     |     +--- linux-vagrant.xml
     +--- bin
           +--- create-users-and-dirs.sh
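Since the module ships with the default configuration, a quick check that the expected files are present can be useful before running it. This is a minimal sketch; check_module is a hypothetical helper name and not a Pineapple command.

```shell
#!/bin/bash
# Hypothetical helper: report which of the two module files are missing.
# $1 = path to the module directory. Returns 0 only if both files exist.
check_module() {
    local module="$1" missing=0 f
    for f in models/linux-vagrant.xml bin/create-users-and-dirs.sh; do
        if [ ! -f "$module/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}

# Example call, assuming the default module location from this example:
# check_module "$HOME/.pineapple/modules/ssh-006-configure-os-weblogic-linux64"
```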

Define model

In the models directory, a model file named linux-vagrant.xml is defined with the content:

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <mmd:models xmlns:mmd="http://pineapple.dev.java.net/ns/module_model_1_0"
        xmlns:shp="http://pineapple.dev.java.net/ns/plugin/ssh_1_0" >
      <mmd:model target-resource="regex:ssh-node.*">
        <mmd:content>
          <shp:ssh>
            <shp:copy-to source="modulepath:bin/create-users-and-dirs.sh" destination="/tmp/create-users-and-dirs.sh"/>
            <shp:execute command="sudo chmod +x /tmp/create-users-and-dirs.sh" />
            <shp:execute command="sudo /tmp/create-users-and-dirs.sh" />
          </shp:ssh>
        </mmd:content>
      </mmd:model>
    </mmd:models>

The configuration details

Two schemas are used in the model file. The http://pineapple.dev.java.net/ns/module_model_1_0 schema defines the namespace mmd, which provides the general infrastructure for models. The http://pineapple.dev.java.net/ns/plugin/ssh_1_0 schema defines the namespace shp, which is used to define the model for the SSH plugin. Since multiple schemas are used in the model file, the elements are qualified with their namespace prefix.

The target-resource attribute defines a reference to the resource which is targeted when the model is executed. In this case, the value regex:ssh-node.* defines a regular expression for targeting multiple resources, i.e. all the resources whose ID starts with ssh-node.
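To see which resource IDs a pattern such as ssh-node.* selects, the pattern can be tried against a list of IDs on the command line. The sketch below is only an illustration: select_targets is a hypothetical function, it assumes that the pattern has to match the whole resource ID (grep -x), and the regular expression dialect Pineapple uses may differ in details from grep's extended regular expressions.

```shell
#!/bin/bash
# Hypothetical illustration: print the IDs which are matched in full by a pattern.
# $1 = regular expression (the part after "regex:"), remaining arguments = resource IDs.
select_targets() {
    local pattern="$1"
    shift
    # -E: extended regular expressions, -x: the pattern must match the whole line.
    printf '%s\n' "$@" | grep -E -x -- "$pattern" || true
}

# Prints ssh-node1, ssh-node2 and ssh-node3, one per line; ssh-gateway is skipped.
select_targets 'ssh-node.*' ssh-node1 ssh-node2 ssh-node3 ssh-gateway
```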

The main part of the model consists of three commands, which perform the configuration:

- Copy a shell script from the bin directory within the module to the /tmp directory on the accessed host. The modulepath: prefix in the source attribute resolves the source path relative to the module directory.

- Change execution rights for the shell script.

- Configure the OS by executing the shell script.

The execute commands in the model are run using sudo. Whether sudo is required depends on the privileges of the user which the SSH plugin uses to connect to a given host. The users were defined in the credentials file in the previous section.
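For comparison, the three commands in the model correspond roughly to the following manual steps with the OpenSSH clients scp and ssh. This is an illustration of the effect, not a description of how the plugin is implemented. The functions run and configure_host are hypothetical, and the sketch assumes it is started from the module directory and that the vagrant user can log in to the host.

```shell
#!/bin/bash
# Run a command, or only print it when DRY_RUN is set to a non-empty value.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Rough manual equivalent of the model for one host. $1 = host name or IP address.
configure_host() {
    local host="$1" script="/tmp/create-users-and-dirs.sh"
    run scp bin/create-users-and-dirs.sh "vagrant@$host:$script"   # copy-to
    run ssh "vagrant@$host" "sudo chmod +x $script"                # first execute
    run ssh "vagrant@$host" "sudo $script"                         # second execute
}

# Preview the commands for Node1 without connecting to it.
DRY_RUN=1 configure_host 192.168.34.10
```

Pineapple performs these steps for every resource matched by target-resource, so no loop over the hosts is needed in the model.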

Configuration done by the shell script

The shell script bin/create-users-and-dirs.sh in the module is defined with the content:

    #!/bin/bash

    # create users
    /usr/sbin/groupadd oinstall
    /usr/sbin/groupadd oracle
    /usr/sbin/useradd -m -g oinstall -G oracle weblogic

    # set sudoers privileges
    echo 'Cmnd_Alias FMW_OP_CMDS=/sbin/ifconfig, /sbin/arping' >> /etc/sudoers
    echo '%oinstall ALL = NOPASSWD: FMW_OP_CMDS' >> /etc/sudoers

    # create binaries directories
    mkdir -p /u01/app/oracle/product/fmw
    chown -R weblogic:oinstall /u01/app/oracle/product/fmw
    chmod -R 775 /u01/app/oracle/product/fmw

    # create shared directories
    mkdir -p /u01/app/oracle/admin
    mkdir -p /u01/app/oracle/admin/shared/aservers
    mkdir -p /u01/app/oracle/admin/shared/mservers
    mkdir -p /u01/app/oracle/admin/shared/certs
    mkdir -p /u01/app/oracle/admin/shared/clusters
    chown -R weblogic:oinstall /u01/app/oracle/admin
    chmod -R 775 /u01/app/oracle/admin

    # create unshared node manager directories
    mkdir -p /u01/app/oracle/admin/nodemanager
    chown -R weblogic:oinstall /u01/app/oracle/admin/nodemanager
    chmod -R 775 /u01/app/oracle/admin/nodemanager

    # create shared application directories
    mkdir -p /u01/app/oracle/admin/appfiles
    chown -R weblogic:oinstall /u01/app/oracle/admin/appfiles
    chmod -R 775 /u01/app/oracle/admin/appfiles

    # create log directories
    mkdir -p /u01/logs/domains
    mkdir -p /u01/logs/nodemanager
    chown -R weblogic:oinstall /u01/logs
    chmod -R 775 /u01/logs

Users and groups

The script creates two groups:

- The oinstall group which owns the installed WebLogic binaries.

- The oracle group which is the secondary group for the weblogic user.

The user weblogic is created to run the WebLogic software (e.g. server instances and the node manager) to achieve separation from the root user. The primary group of the weblogic user is oinstall.
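The script calls groupadd and useradd unconditionally, so a second run against the same host reports errors for the groups and the user which already exist. If the module is expected to be executed more than once, the step could be made rerunnable along the following lines. This is a sketch of an alternative, not the content of the shipped script. The function names and the GROUPADD and USERADD variables are hypothetical.

```shell
#!/bin/bash
# Paths of the tools, as used by the original script. They are kept in
# variables (hypothetical names) so that they can be overridden.
GROUPADD="${GROUPADD:-/usr/sbin/groupadd}"
USERADD="${USERADD:-/usr/sbin/useradd}"

# Create the group $1 only if it does not exist yet.
ensure_group() {
    getent group "$1" > /dev/null || "$GROUPADD" "$1"
}

# Create the user $1 with primary group $2 and secondary group $3,
# but only if the user does not exist yet.
ensure_user() {
    local user="$1" primary="$2" secondary="$3"
    id -u "$user" > /dev/null 2>&1 || "$USERADD" -m -g "$primary" -G "$secondary" "$user"
}

# Usage as root on the target host:
# ensure_group oinstall
# ensure_group oracle
# ensure_user weblogic oinstall oracle
# id weblogic    # lists the primary group oinstall and the secondary group oracle
```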

Sudo privileges to support whole server migration

Support for whole server migration in WebLogic requires that the user running the node manager has privileges to dynamically assign and remove IP addresses on the required NICs when a managed server is started, stopped or migrated.

Dynamic assignment of IP addresses is done by the node manager through the usage of the WebLogic supported script wlsifconfig.sh. This script uses two OS commands to implement the assignment/removal: ifconfig to assign IP addresses to NICs and arping to broadcast the assignments.

The wlsifconfig.sh script requires that the two OS commands can be executed with sudo without entering a password. To support this requirement, an alias for the two commands is defined in the /etc/sudoers file, and all members of the oinstall group are given permission to run these two commands through sudo with no password.
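Appending to /etc/sudoers with echo adds the two rules again on every run and performs no syntax check. One possible alternative, shown here as a sketch rather than as the method used by the shipped script, is to write the rules to a separate file, let visudo check it, and install it as a drop-in file. The sketch assumes that /etc/sudoers contains an includedir line for /etc/sudoers.d; the function names and the file name fmw-op-cmds are hypothetical.

```shell
#!/bin/bash
# Print the two sudoers rules which the script appends to /etc/sudoers.
sudoers_rules() {
    echo 'Cmnd_Alias FMW_OP_CMDS=/sbin/ifconfig, /sbin/arping'
    echo '%oinstall ALL = NOPASSWD: FMW_OP_CMDS'
}

# Run as root: write the rules to a candidate file, check the syntax with
# visudo, and install the file only if the check succeeds.
# Assumption: /etc/sudoers includes the directory /etc/sudoers.d.
install_sudoers_rules() {
    local candidate
    candidate="$(mktemp)"
    sudoers_rules > "$candidate"
    if visudo -c -f "$candidate" > /dev/null; then
        install -m 0440 "$candidate" /etc/sudoers.d/fmw-op-cmds
    else
        echo "sudoers rules failed the syntax check" >&2
    fi
    rm -f "$candidate"
}
```

Because the drop-in file is replaced as a whole, running install_sudoers_rules again does not duplicate the rules.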

Directories

Directories are created for the operation of WebLogic. The main point is that the directories are created with the mode 775 and the owner weblogic:oinstall:

- rwx for user weblogic.

- rwx for group members of the group oinstall.

- r-x for others.
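The effect of mode 775 can be tried out safely in a scratch directory, without root privileges and without touching /u01. The sketch below repeats the mkdir and chmod pattern of the script on a temporary path and prints the resulting mode. It relies on stat -c, the GNU coreutils form found on CentOS and Red Hat.

```shell
#!/bin/bash
# Illustration in a scratch directory: what mode 775 looks like on a directory.
scratch="$(mktemp -d)"
mkdir -p "$scratch/u01/logs/domains"
chmod -R 775 "$scratch/u01/logs"

# %a = octal mode, %A = symbolic mode (GNU stat).
mode="$(stat -c '%a %A' "$scratch/u01/logs/domains")"
echo "$mode"    # prints: 775 drwxrwxr-x

rm -rf "$scratch"
```

On a configured host, the same stat call against /u01/logs or /u01/app/oracle/admin, with %U:%G added to the format, also shows the owner weblogic:oinstall.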

The white paper Oracle WebLogic on Shared Storage: Best Practices is used as inspiration for the created directories.